Reimagining Balanced Assessment Systems

An important new book from the National Academy of Education can support state and district leaders in building balanced assessment systems.

Our associates are giving many fascinating presentations at the assessment field’s two big spring conferences, NCME and AERA. Here’s a quick rundown.

Existing frameworks for universal design aren’t quite right for educational assessment, so we teamed up and designed a new one.

Before launching a project to build an “innovative” assessment for education, it’s wise to define what you want to move toward—and away from.

The NFL’s use—and misuse—of test scores in the draft highlights a few important lessons we should apply to educational assessments.

States complain that ESSA bars them from getting the accountability systems they really want. We present three examples of systems that show otherwise.

Through-year and other novel test designs face challenges in peer review. Three changes by the U.S. Department of Education could improve the process.

Like other 21st century skills, ethical thinking presents substantial challenges to educators who want to define, teach, and assess it.

Guidance on defining and assessing creative thinking, a skill that’s increasingly in demand among employers.

In this TikTok era, we break teachers’ learning into bite-sized pieces and expect them to learn on their own. But we accept this model at our peril.

“Failing to plan is planning to fail.” That’s particularly true when it comes to building assessments that are inclusive of students with disabilities.

An exploration of key considerations, and a framework, for exhibitions of learning.

We must share our knowledge broadly, free of charge, without restriction, to support an important public good: improving outcomes for all students.

Google’s recent release of Gemini showcases AI’s potential to reshape educational assessment. We must be open to these changes, while monitoring and managing their risks.

2023 was a busy year for assessment and accountability. Scott Marion recaps the year’s key issues and looks ahead to questions that loom large for 2024.

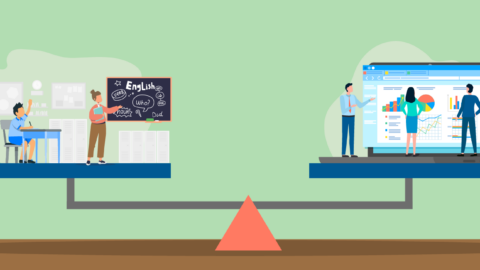

In this three-part series, we explore key aspects of school accountability design that need to be properly balanced. In Part 3, the final post, we examine formative vs. summative feedback.

In this three-part series, we explore key aspects of school accountability design that need to be properly balanced. In Part 2, we examine precision vs. actionability.

In this three-part series, we explore key aspects of school accountability design that need to be properly balanced. In Part 1, we examine simplicity vs. complexity.