In Search of Simple Solutions for the NAEP Results

Simple Explanations and Solutions are Appealing, but Insufficient to Effect the Changes Needed to Move NAEP Results

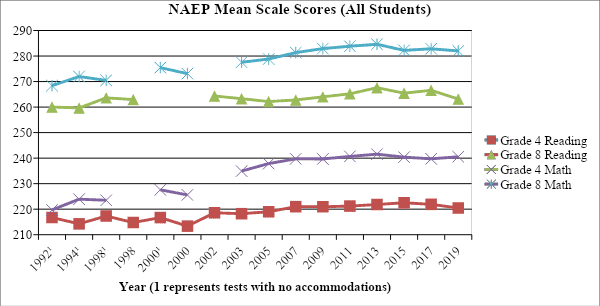

The 2019 National Assessment of Educational Progress (NAEP) results were released last week to much consternation, except perhaps in Mississippi and Washington, D.C., where improved results were celebrated.

Nationally, results were up slightly in fourth-grade math, flat in eighth-grade math, and down in both fourth- and eighth-grade reading. These results continue a disturbing lack of progress over the last decade.

There may be several legitimate explanations, or at least partial explanations, for the poor results: the misalignment of NAEP expectations with the Common Core State Standards (the foundation of most state content standards), the lack of motivation among students living in an over-tested country, or changes in the tested population since 1992.

My concern, though, is with the rush to both causal explanations and simple solutions to fix our nation’s educational ills.

Humans Desire Explanations—Even Without Supporting Evidence

Henry Braun, a Center for Assessment Board member, likens the way many people craft explanations of phenomena to Kipling’s Just So Stories. Humans are storytellers, and we love creating stories to explain things we observe in the world, such as NAEP findings, whether or not there is any evidence to support those stories. We do this after the fact and seem to forget any a priori hypotheses we might have offered before the results were released.

Humans, especially Americans, generally view things with short time horizons. I used the NAEP Explorer Tool to produce the following graph depicting the national average scale scores for reading and math on each fourth- and eighth-grade NAEP assessment since 1992.

It appears there have been plenty of ups and downs through the years—rather than falling off the cliff as some headlines would have us believe—but the main takeaway is that we have seen very little progress—as measured by NAEP—since the early 1990s.

I point out this long-term view to challenge some of the Just So stories I’ve heard, which blame the 2019 NAEP results on the Common Core State Standards, on poor reading instruction, and, in the case of Education Secretary Betsy DeVos, on the overreach of the federal government.

Interestingly, I have not heard many people blame the lower reading scores on the hours youth spend in front of their devices, perhaps because that might mean adults would have to model non-device behavior.

The Explanation Is: There Is No One Simple Explanation

Even if the trends were the cliff-dive that has been described, it takes much more than a trend to establish causal inferences. Establishing a defensible link between cause and effect requires a thoughtful experimental (or close proxy) design that allows the researchers to rule out other factors that might be contributing to the observed results.

This approach is a challenge even in well-designed research studies, and virtually impossible with an observational monitoring study like NAEP. Educating children is an incredibly complex endeavor, and explaining large-scale results is just as complex. It takes a lot of hubris to pick out one factor to explain the results: even if it is a viable explanation for one grade and subject (e.g., fourth-grade reading) at one point in time, the same reason is unlikely to apply to both grades and both subjects across the last 30 years, which weakens the argument.

Secretary DeVos, in her speech on October 30th associated with the NAEP release, suggested that having funding follow students would help improve student achievement: “There’s a student-centered funding pilot program for dollars to support students—not buildings. I like to picture kids with backpacks representing funding for their education, following them wherever they go to learn.”

DeVos also touted Florida’s voucher, tax-credit scholarship, and charter programs as the reasons for Florida’s modest increase in performance over the years. But why didn’t she credit Florida’s high-stakes, top-down accountability system? My point is that it is almost impossible to isolate any single cause of the results in such a complex system. Further, while high-stakes accountability systems have shown some limited association with statewide score gains, the free-market approaches DeVos advocates have produced little positive evidence.

Making causal leaps in a single bound often leads to simplistic explanations.

Evidence Plays a Critical Role In Enacting Any Real Educational Improvement

There are evidence-based approaches that should be considered if we are serious about improving education at scale. I often come back to the simple elegance of Elmore’s (City et al., 2003) instructional core:

There are only three ways to improve student learning at scale: You can raise the level of the content that students are taught. You can increase the skill and knowledge that teachers bring to the teaching of that content. And you can increase the level of students’ active learning of the content. That’s it… Schools don’t improve through political and managerial incantation; they improve through the complex and demanding work of teaching and learning (p. 24).

Elmore went on to emphasize that if you change any of the three aspects of the core, you must also change the other two. States, individually and collectively, have partially addressed the level of the content through more rigorous standards and testing. I say partially because to really increase the meaningfulness of the content (and skills) for students, we should be addressing curriculum like many high-performing countries, but the curriculum is generally off-limits in the U.S. We have not attended as well to teachers’ knowledge and skills and student engagement.

We have made faint attempts to deal systematically with improving teaching quality, but most would agree that state-led teacher evaluation has been a failure. Increasing teachers’ knowledge and skills requires systematic initiatives that run from recruitment into the field through ongoing professional learning opportunities. Finally, many are working to personalize students’ learning opportunities in an effort to increase their active learning, but we must do more. Many have rightly advocated for “wrap-around” services to support students’ multiple health, emotional, dietary, and other needs associated with poverty, systemic racism, and trauma.

It is beyond the scope of this post to go into detailed discussions of research-based educational improvement strategies except to point out that the real work of reform is complex and may require substantial changes to the current system. I am simply trying to illustrate the fallacies of pulling excerpts from a long-term trend, jumping to unsubstantiated causal inferences, and offering solutions based on beliefs instead of evidence. To paraphrase an often-heard quote, there is a simple solution to every complex problem, except it is wrong!

Dramatically improving student learning and increasing the equality of student outcomes are too important to be left to unproven beliefs. Ultimately, I do not think we can explain away the results. Even with better alignment and increased student motivation, it is unlikely we would see praiseworthy results. Instead, we should recognize that the NAEP results may illustrate how hard it is to improve a decentralized system at scale.

References

City, E. A., Elmore, R. F., Fiarman, S. E., & Teitel, L. (2003). Instructional rounds in education: A network approach to improving teaching and learning. Cambridge, MA: Harvard Education Press. [See particularly Chapter 1: The Instructional Core.]