But How Do We Know It’s Working?

Program Evaluation to Prove What We Think We Know

Program evaluation is a powerful tool to help us understand whether the things we think are working (e.g., initiatives, programs, interventions, self-help plans) are actually working. Rather than assuming that something will work, program evaluation helps us establish a systematic plan to document the processes and outcomes we expect to see, and to identify the evidence we can use to measure whether those processes and outcomes are actually coming to fruition.

Program evaluation can be applied to almost anything, from pandemic recovery and accelerating learning to your latest plan to eat better and get plenty of sleep. For example, I’ve recently discovered that I really enjoy obstacle course races (OCRs). And yes, program evaluation helped me prepare for my first OCR.

Program Evaluation and OCRs

OCRs (as I’ve learned) are challenging in a different way from traditional road or trail races. They require a mix of endurance, strength, agility, and mental fortitude applied across a variety of skills to reach the finish. Put simply, you run a set distance (e.g., 5k, 10k, 20k, 50k) while completing anywhere from 20 to 60 obstacles. In other words, being able to run the distance alone won’t get you to the finish line. The joke in the community is that running is the easy part: the “active” recovery. Sometimes you have to climb walls or ropes, sometimes you have to lift or carry heavy stuff over set distances, and sometimes you have to traverse a series of monkey bars or other American Ninja Warrior-style setups (I’m partial to Spartan OCRs, but there are a few brands out there). Currently, the obstacle I find most intimidating is the one below, since it combines a rope climb, unstable monkey bars, and a lot of grip endurance:

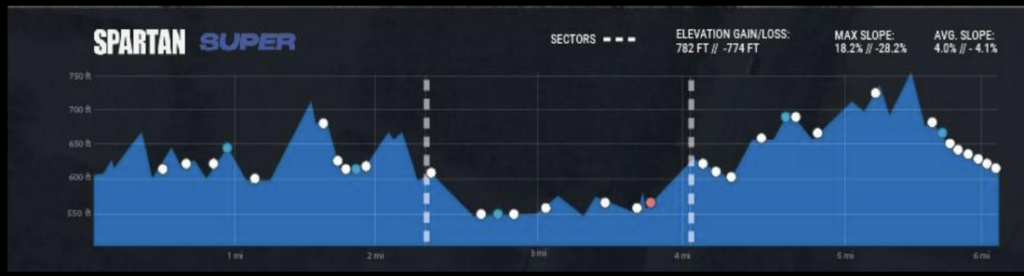

Keep in mind that this single obstacle is one of 28 in a 10k race. That 10k also covers only the running, not the obstacles themselves, so add another 2–3k to the total distance covered. As a window into my current racing, here’s a snapshot from a recent 10k that gives racers a sense of what to expect:

After a lot of research, I learned which exercises I needed to do to successfully complete an OCR. To feel sufficiently prepared, I needed two very distinct sets of information. First, I needed to know whether I was improving along the way on individual activities. Second, I needed to know whether I was actually going to complete the whole race. Enter program evaluation.

Program evaluation is a systematic method for collecting, analyzing, and using information to answer questions about projects, policies, and programs, with a particular focus on their effectiveness and efficiency (Shackman, 2018).

Program evaluation differs from research in that research seeks to understand whether X causes (or influences) Y. Research also often aims to generalize its findings to a larger population.

Program evaluation, by contrast, asks whether a program that uses X is good, so to speak: whether it is effective in a given context and for a specific set of people. Generalizability isn’t always the name of the game.

Types of Program Evaluation

There are many types of program evaluation. An evaluation can focus on process, outcomes, finances, or impact. Evaluations can also take different perspectives (e.g., evaluations focused on use, on critical theories, or on phenomena). For the purposes of my OCR example, four types of evaluation are relevant:

- Formative evaluation: Evaluation to improve the design, development, or implementation of a program or effort.

- Process evaluation: Evaluation focused on how well the program has been implemented and whether the program’s procedures were effective. This type of evaluation occurs periodically throughout the life of the program. While it may not be intended to improve the program, it helps evaluators understand what contributed to the program’s or initiative’s outcomes.

- Summative evaluation: Evaluation intended to make a retrospective judgment about a program or effort. Summative evaluation typically happens after the program has concluded, to understand whether it had its intended effect.

- Impact evaluation: Evaluation that seeks to understand the long-term impact of a program by examining whether it brought about sustained changes.

In my case, I was interested primarily in formative and summative evaluation, but all four types of evaluation apply.

Formatively, was my training helping me get faster, handle more playground activities (you’d be surprised how much harder the monkey bars get the further you are from your playground days), and maintain mobility under load? Keep in mind that this type of information was about adjusting my activities to improve my training.

Process-wise, I was interested in taking temperature checks to see whether I was improving toward my goal. Was my training routine actually making me better at climbing a rope? Was I implementing the right activities overall to reach my outcome?

Summatively, I wanted to know whether my training, taken as a whole, would let me successfully complete my first OCR (it did, btw).

From an impact standpoint, did I see an improvement in my overall lifestyle and health? In this case, yes, but working toward any competitive race would more than likely have done the same thing. Perhaps the larger question is about sustaining a healthy lifestyle. If you asked me candidly, “Do you think OCRs and their associated training are conducive to sustaining a healthy lifestyle?” the answer would be complicated. With enough knowledge and restraint, yes. But a lot of people push too hard and end up with long-term injuries. There are probably better ways to achieve lasting health, wellness, and balance.

Internal and External Program Evaluation

Many grant applicants are familiar with the concepts of internal and external evaluators. Internal evaluators are typically partners concerned with improving the program, monitoring implementation fidelity, and communicating the program’s processes and practices to stakeholders. Internal evaluation is closer to formative evaluation, or evaluation to improve the design, development, or implementation of the program.

On the other end of the spectrum, external evaluators are typically interested in the program’s outcomes, in ensuring the funding was well placed, and in monitoring whether grant requirements are met. External evaluation is closer to summative evaluation.

While internal/formative and external/summative evaluations are often walled off from one another, both can be used to better understand the quality of program conditions and implementation. Together, they help us understand the why behind what we are seeing: whether the program had the anticipated effect on the overall outcomes we are trying to affect (e.g., student learning recovering at an accelerated rate, or student engagement improving in ways that improve student learning).

Program Evaluations in the Current Educational Problem Space

The same principles and types of program evaluation that helped me improve my training process and ensure I successfully completed my first OCR (and that continue to prepare me for the next couple this year) can also be hugely powerful in monitoring the implementation and outcomes of educational programs or interventions.

Take, for example, ESSER funding for COVID recovery efforts, Innovative Assessment Demonstration Authority (IADA) grants, or the recent Consolidated State Grant Applications (CSGAs). Many externally funded or regulation-relaxing applications require evidence of program success at the conclusion of the grant or funding cycle (summative evaluation). But how do you know whether things are working along the way (formative and process evaluation)?

Nearly all states, if not all of them, are in the middle of ESSER-related programs, implementing IADAs, or launching CSGAs. States are therefore primed to maximize the benefit of formative evaluation to better understand whether those programs are being implemented well and how they can be improved on the fly. States should also be establishing summative evaluation plans to understand whether (and why) our programs had an impact. We are leveraging an unprecedented level of funding in education, and this isn’t just about justifying the money spent on our efforts. We need to monitor, while the investment is still underway, whether our just-in-time investments are actually supporting student learning and educator efforts, so that we can maximize efficiency and make the most of the money available.

As with my own, much less socially beneficial OCR example, there’s little reason to waste time on activities that aren’t contributing to our goal. Program evaluation helps us identify those activities, and it does so in a way that also improves the process.

In a future post, I will describe the steps involved in planning a well-designed evaluation based on a solid program theory and program logic (see my previous post on logic models and patios here).