Enhancing the Impact of Performance Assessments on Instruction

A Research-Based Framework for Monitoring the Use of Performance Assessments to Promote Positive Instructional Change

This is the fourth post by one of our 2021 summer interns based on their project and the assessment and accountability issues they are addressing this summer with their Center mentors. Sarah Wellberg, from the University of Colorado Boulder, is working with Carla Evans to investigate the relationship between the use of complex performance assessments and efforts to promote instructional practices that improve student learning. This is the second post by Sarah and Carla. In their previous post, they discussed the importance of understanding the relationship between key instructional practices and the interactions among teachers, students, and content.

There is renewed interest in using performance tasks as part of state and local assessment systems. One common reason for including these more complex and authentic assessments is to incentivize teachers to alter their instructional practices. Policymakers who are interested in using performance assessments to spur changes in instructional practice need to be acutely aware of the assumptions that underlie those changes and should plan to monitor the proposed use of performance assessments to ensure that it promotes positive changes in instruction.

New policies that aim to impact instruction need to account for the dispositional and capacity barriers that teachers may encounter, which we outlined in our previous post. Policies should include advance planning for how professional development will support shifts in teaching practices by directly addressing common barriers.

Large-Scale Efforts to Promote and Monitor Instructional Changes

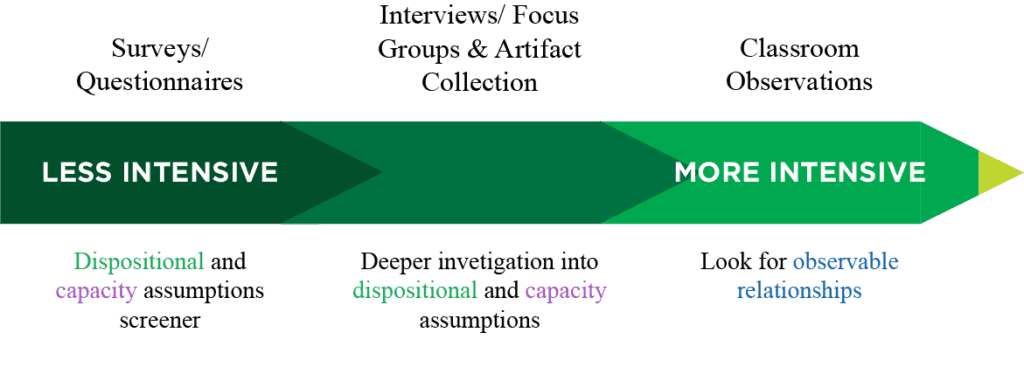

States, districts, and schools that are interested in monitoring the impacts of performance assessments on classroom instruction can use our research-based instructional change framework to progressively gather more detailed information on the extent to which the underlying dispositional and capacity assumptions hold prior to direct observation. This progression is depicted in Figure 1.

Any large-scale effort to monitor instructional change could begin with a survey that measures teachers’ (and/or students’) attitudes and dispositions toward the six practices identified in our previous post, along with their perceptions of their access to the resources, supports, and/or training required to implement the practices with fidelity.

- If the surveys indicate that the assumptions are not being met, then more detailed interviews or focus groups could be conducted to illuminate the barriers that teachers are experiencing. This information could be used to provide additional supports and resources to teachers.

- If the survey results suggest that many of the assumptions are met, interviews or focus groups could probe deeper to confirm that this is the case and to identify the types of capacity-building supports and resources teachers found most helpful. Artifacts, such as examples of instructional tasks or pieces of student work, could also be collected at this stage. Doing so would allow stakeholders to confirm that the teachers do in fact have access to, and are using, high-quality materials.

- Because self-report surveys and interviews may be influenced by social desirability bias, memory effects, or other threats to internal or external validity, direct observation of lessons is likely required to get an accurate picture of classroom practice. Observations, however, are significantly more time-intensive and costly when conducted at scale than are surveys.

Classroom observations would be the last step in collecting information about how teaching practices have shifted as a result of the performance assessment ‘intervention’.

- We know that if the underlying dispositional and capacity assumptions do not hold, then it is unlikely that the desired relationships between the student, the teacher, and the content will be observed.

- Even if the assumptions hold and teachers are using high-quality tasks, it is common for over-scaffolding to reduce the rigor of tasks during implementation. Direct observation is, therefore, the best way to determine whether the teachers are implementing tasks with fidelity and whether collected samples of student work likely resulted from students doing the bulk of the intellectual work themselves.

Promoting and Monitoring Instructional Changes at the Local Level

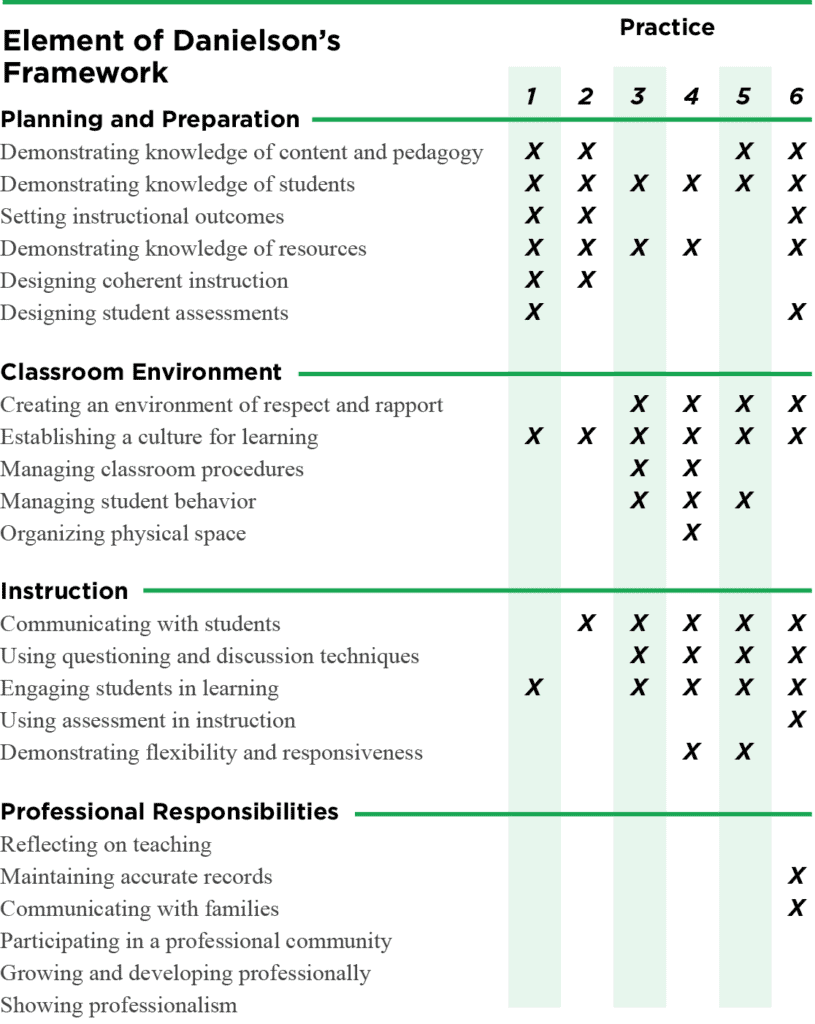

Our framework may also be helpful for principals and other building administrators who wish to promote the use of these practices directly with teachers. Many schools and districts use Danielson’s framework during their teacher evaluation cycles, and the six key instructional practices we identified in our previous post relate closely to the elements found in this common framework (see Table 1). It follows that those teachers who are using these practices effectively are likely meeting most of the requirements for quality teaching that Danielson’s framework has identified.

If the evaluating administrator does not observe the specified relationships between the student, the teacher, and the content during their evaluative classroom visit, they may ask the teacher about the assumptions during their debrief meeting. This can help administrators identify the barriers that teachers are facing so that they can arrange for appropriate, targeted professional development and the acquisition of needed resources.

Note: The practice numbers in Table 1 are as follows: (1) Using high-quality questions and prompts, (2) Integrating components of knowledge with habits of thinking, (3) Actively engaging students in learning, (4) Learning through discussion, (5) Eliciting and interpreting student thinking, (6) Giving students multiple opportunities to showcase their knowledge and abilities.

Conclusion

Assessment does not operate in a vacuum. Curriculum, instruction, and assessment should be coherently linked through a common model of learning and alignment to the state content and performance standards. Embedding performance assessments in high-quality curricular materials, where they can serve both instructional and summative classroom purposes, is critical to supporting the deeper learning policy goals espoused by many.

Investigations into instructional changes tend to involve cost- and time-intensive methods such as interviews and classroom observations. We have put forward a strategy for monitoring likely changes in instruction, which begins with a more efficient way to measure the assumptions that need to hold in order for those practices to be used successfully.

These assumptions may also be addressed during the development of a new policy. By explicitly including plans to provide both curricular replacement units (Marion & Shepard, 2010) that teachers can use and professional development around cognitive development, learning theories, and instructional strategies, policymakers can increase the likelihood that teachers will adopt the intended instructional practices.