User-Friendly Documentation and Validation Processes (Part 1)

Supporting A Vision with Documented Strategy and Tactics

Professionally and personally, we often have projects or tasks where we define desired goals or outcomes, and sometimes even identify the steps that it will take to get from where we are to where we want to be. However, we often end up frustrated and short of our goal because we have not fully documented the strategy and tactics required to achieve the desired outcome.

Enter the difference between specifying an outcome for Halloween (i.e., a rad costume), and the strategy and tactics necessary to make it happen.

Scene: October 30, 2021. Midday. My son wanted to be the Fortnite™ bush, which is exactly what it sounds like: if you crouch, you look like a bush. Here’s what it looked like:

The outcome was very clear. He wanted to be a bush.

I might have had a plan in my head for how to make said bush, but my nearly 12-year-old son did not. So, I prompted him to describe how he hoped to achieve his desired outcome. Exactly what was his plan?

Following that discussion, I asked him to describe how he would operationalize that plan. It was a great bonding experience and reminded me of the clear differences between the general nature of strategy and the specificity of the tactics used to execute it. He had a strategy, but the tactics (finding and buying the right glue gun, sourcing a helmet, buying a Styrofoam cap, finding 400 strands of ivy, and putting it all together) were far from clear.

Ultimately, we succeeded, as you can see from the picture above, but more lead-up time and a few diagrams might have helped both the planning and the execution. Shortly, I’ll provide two other examples that combine strategic and tactical project planning and that are closer to the education world, but first I would like to define two high-level concepts: strategic planning and tactical planning.

Strategic planning is focused on defining the vision of a project (or process or initiative) and on identifying the goals you are trying to meet. It is a big-picture view of what you want to do and how those “whats” are prioritized, but it doesn’t really tell you how to do them. Strategic planning is supported by things like vision statements, identification of key outcomes, and prioritization of initiatives. But strategic planning isn’t really planning. It’s prioritizing and focusing on the “what,” and sometimes the “so what?”, while leaving the “how” unaddressed.

Tactical planning, on the other hand, addresses how the project will be completed and how the outcome will be achieved. Tactical planning is all about operations: who will get the work done, what needs to get done, when it will happen, and exactly how it will happen. Tactical planning is supported by things like logic models, Gantt charts, task templates, meeting agendas, meeting schedules, RACI matrices, and the identification of people and points of accountability.

Both strategic and tactical planning require attention to grain size. Perhaps more importantly, both depend on systems of documentation and on an understanding of the roles that processes and procedures play in developing tactical plans that adhere to the larger strategy. This documentation should then be evaluated against the same criteria used for balanced assessment systems: coherence, comprehensiveness, continuity, efficiency, and utility, which I will describe in Part 2 of this post.

Applying Strategy and Tactics to Large-Scale Educational Accountability

Another testament to the importance of strategic and tactical planning is the role they should play in accountability design, development, implementation, and evaluation. A recent career focus of mine has been the systematic evaluation of accountability systems under ESSA. The Evaluating Accountability Systems under ESSA Toolkit is a relatively comprehensive resource that dives deeply into the policy, practical, and technical issues associated with state accountability systems.

The Toolkit is designed to help states reflect on how their accountability system under ESSA is functioning and (where applicable) identify strategies for continuous improvement. It ostensibly provides states with a “roadmap” for developing an initial plan to validate their state accountability system. However, following this roadmap’s turn-by-turn directions through the Toolkit requires a significant amount of knowledge and capacity. A super-user may be able to navigate the various modules in the Toolkit without support, but most states would benefit from a 30,000-foot walkthrough, an identification of the most relevant areas of exploration, and a Sherpa to guide them through the policy, technical, and utility checks that start in the clouds and eventually take them into the weeds. The Toolkit enables states to select their own starting point, but the roadmap is not yet readily accessible to all drivers and navigators.

The Toolkit encompasses eight areas of focus that explore each of the following aspects of a state accountability system under ESSA: (1) the system theory of action, (2) the state’s system of annual meaningful differentiation, (3) how indicators interact in the system, (4) the individual indicators, (5) Comprehensive Support and Improvement schools, (6) Targeted and Additional Targeted Support and Improvement schools, (7) reporting, and (8) the state support system.

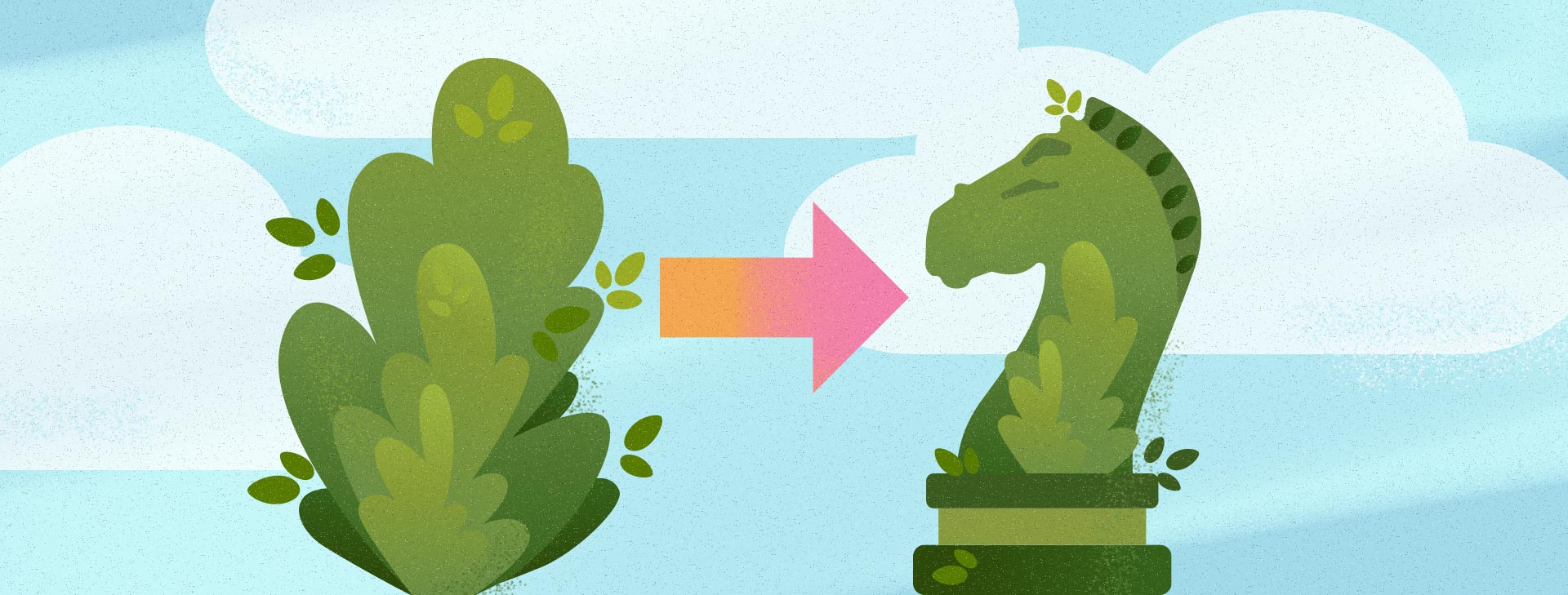

While it is a comprehensive resource, its utility is limited by its current format. The limitation is perhaps most evident when the organization of the tool is juxtaposed with the actual workflow of the decisions, as presented in the two figures below. In Figure 1, the Toolkit is presented as a sequential process that begins with examining a theory of action, explores the overall system design, and then branches into its component parts, ultimately leading to an examination of reporting and support structures. Figure 2 perhaps more accurately depicts the interrelated nature of the modules and highlights the incremental and iterative nature of the exploration. The evidence collected across modules builds a body of evidence about system quality, but states have the flexibility to jump in and out of the Toolkit based on their highest-priority areas. The tool and its resources are available in D’Brot, LeFloch, English, & Jacques (2019).

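[Figure 1: The Toolkit as a sequential process] versus [Figure 2: The interrelated, iterative modules of the Toolkit]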

Specific to our development of the accountability system evaluation Toolkit, we thought it was perhaps more important for state leaders developing accountability systems to see the branching nature of the workflow. Most importantly, we wanted to convey that the workflow must begin with a clearly specified theory of action (or at least a well-specified set of outcomes or policy goals) and that grounding a system in a theory of action is vital regardless of how its workflow is displayed. In retrospect, we perhaps should have described the contents of the workflow in greater detail and explained more explicitly that the validation process is iterative, interconnected, and ongoing.

The point is that the documentation for the Toolkit is coherent, comprehensive, continuous, and efficient (i.e., it provides a high-level roadmap) for the development of accountability systems under ESSA (and arguably for other accountability systems, locally and beyond ESSA), but it can be made far more useful through (a) additional layers of information and (b) greater integration across those layers.

Improving the Utility of Documentation by Making it More User-Friendly

Having stepped away from the Toolkit for several months, I can see that there is a tremendous amount of horizontal coherence in the document set but little vertical coherence (mostly because there is very little vertical structure to it). That is, the Toolkit and its resources are flat and wide, at a small grain size. Creating a vertical structure could substantially improve the Toolkit’s utility by enabling a much more user-friendly view of the resources and perhaps more direction for their use.

In a separate project, my colleague Erika Landl and I worked on a toolkit to help states and districts select and evaluate the use of interim assessments. To maximize its utility, the interim toolkit will ultimately rely on a vertically coherent set of documentation and resources that looks something like this:

I recognize that producing documentation is time-consuming and often difficult, but a single layer of documentation is often just the minimum viable product (like a high-level roadmap without informative turn-by-turn directions) needed to memorialize something and begin creating a legacy of use. However, there are exemplars, models, and strategies out there for making resources more usable and impactful. This process starts with creating a value proposition and a clear purpose statement at the top, then incrementally working your way down the layers while making it very clear to users what each layer is intended to do. I will attempt to lay this out in a future post focused on the accountability systems evaluation Toolkit.

Although some examples are available, more research-based information is needed. In a recent project initiative, I have been thinking through how the work of strategically prioritizing and then operationalizing plans through tactics and actions actually happens. The literature on strategic planning is substantial (e.g., see Allison and Kaye (2005), Ansoff (1980), or Porter (1980), to name a few), but resources supporting the act of engaging in strategic planning are scarce. If you’re so inclined, Patrick Lencioni’s work is a great resource to get you started (see The Five Dysfunctions of a Team). Resources that focus on the transition from strategic planning to tactical implementation are even harder to find. There are isolated resources that inform steps along the process, such as guidance on building a logic model (e.g., Kellogg, 2004; Shackman, 2015) or on monitoring the implementation of a program (e.g., Bryk, Gomez, Grunow, & LeMahieu, 2015), but using these resources and frameworks typically requires external expertise and guidance.

I’m advocating for the development of high-utility resources and documentation to drive greater use by a wider array of audiences. This documentation may be as important as the work itself.