The Rocky Landscape of Validity Arguments

Considering Validity Arguments within the Context of Effective Communication

Educational stakeholders are often skeptical that the information local and state assessment systems produce is trustworthy, meaningful, and actionable. Assessment professionals must navigate a complex conceptual terrain of scientific best practices, practical constraints, and policy considerations to overcome this skepticism. Curating relevant evidence and translating it into evidence-based stories – called validation arguments in the technical literature – presents a variety of communication challenges that cut across different genres, audiences, and purposes (see Bachman, 2005). I want to reflect on this topic in the context of the conversations our Center team had about assessment communication during its recent annual Brian Gong Colloquium in Boulder, CO.

Setting Out on a Validation Journey

I am a decidedly urban person who loves performing arts of various kinds, good restaurants, cozy hotels, farmers’ and Christmas markets, European travel, and all kinds of modern-day conveniences. Nevertheless, I also appreciate the natural beauty that is present in different areas of North America. Following the Colloquium, I took the opportunity to explore the majestic Rocky Mountain National Park near Boulder:

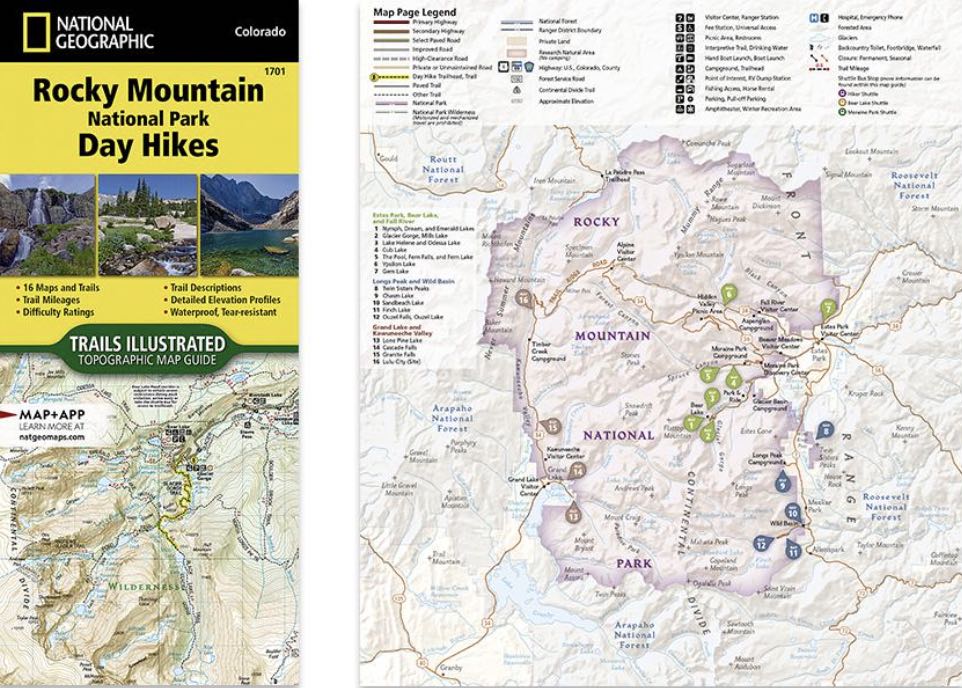

Convincing stakeholders about the value of educational assessments is a bit like charting a path through these mountains, with frameworks for validation arguments serving as a topographical map and scientific best practices reflecting the main established pathways through the terrain. However, it is intuitively clear that staring at a map of mountainous terrain is not the same as admiring the real thing. A map is, after all, a simplification and abstraction that provides specific navigational supports and critical information about individual places.

In fact, one needs different kinds of maps for different purposes, just as one needs different kinds of validation arguments for different purposes and discourse communities (i.e., audiences). For example, a map for hiking or skiing, focusing on trails and slopes, respectively, will foreground different properties of an area than a map for driving through a park with food and shopping options indicated, or a map that shows the biodiversity of an area. Anyone who has ever picked up an unfamiliar type of map for unfamiliar terrain knows that it takes a bit of time to get oriented – learning the geographical boundaries, visual cues, and orientation of the map.

Ideally, we would be able to switch from one representation to another seamlessly, just as one switches views in Google Maps, for instance. In terms of validity argumentation, we would be able to look at claims, supporting evidence, alternative explanations, and overall architectures seamlessly across different documents and communicative contexts – a tough challenge indeed!

Getting the Right Equipment and Skills

Having a map does not mean that one becomes an experienced explorer overnight – that takes skill, experience, and guidance, and it is more about grounded practice than conceptual knowledge. As some of my colleagues at the Center have noted (e.g., Marion, 2022), practitioners who are faced with the challenge of defending various kinds of claims and arguments to different stakeholders have to think very pragmatically about validity argumentation. That is, they have to design and implement studies, review the literature, analyze data, talk to colleagues about evidentiary patterns, make strategic decisions, and so on.

For example, consider the context of a state assessment. An assessment contractor may provide a complex validation argument that foregrounds technical properties across all design and implementation cycles of an evidence-centered design approach. Contractors' work typically focuses on the technical properties of assessment scores, to ensure accurate and consistent reporting of scores and feedback, as well as on the various task and reporting characteristics that ensure fair and accessible performance conditions for all learners. Contractors are usually more concerned with supporting an interpretation argument, and they draw upon a variety of internal research and development evidence, documented in comprehensive technical reports that are often reviewed by state technical advisory committees. Derivative work may also be presented in peer-reviewed venues such as journal articles, book chapters, or conference presentations.

In contrast, state assessment directors typically focus on the use argument for assessment scores at different levels of aggregation, briefly referencing the support for score inferences as needed. They will usually emphasize the power of assessments for monitoring group-level trends in learning – a topic of current relevance given the impact of the pandemic and the associated efforts to recover learning – or their power to demonstrate that equitable learning outcomes have been achieved for certain subgroups. This kind of validation argument is typically documented in slide decks, press releases, and internal reports or memoranda providing strategic guidance.

Selecting a Guide to Find the Right Path

For any assessment program, validity argumentation is highly distributed across discourse communities and across a wide variety of text types and genres, such as technical reports, academic papers, presentations, press releases, and much more. Crafting the various components of validity arguments is complex work, and doing so effectively requires quite a bit of support – it takes a village (in the mountains) indeed!

Each communicative effort occurs within a particular discourse community and genre, each guided by its own set of conventions (see, e.g., Slomp, 2016). Most importantly, there is no singular "appropriate" set of genre conventions that works for every discourse community. It is paramount to understand the particulars of the discourse community one is operating within before creating a particular validation argument, noting that these arguments can change over time, even for the same community.

Professional standards in our field (e.g., AERA, APA, & NCME, 2014) reflect aspirational statements about the technical properties of assessments. These kinds of technical standards, however, are more akin to books explaining road signs without providing a clear map on which those signs are situated. Given the varied and changing demands of different discourse communities, it is no wonder that many practitioners find it challenging to translate these statements into practical guidelines to inform their work. Technical advisory committees can provide helpful guidance in these matters (e.g., D'Brot, 2022).

Making the Most of the Journey

As the famous saying goes, "the journey is more important than the destination." So how do we make the most of our journey across the assessment information space? Which pieces do we select, and how do we arrange them? How do we involve relevant parties who help us make new connections and see parts of our world in a different light? In terms of technical documentation, newer approaches to validity-oriented technical reports that foreground argument components rather than technical details are certainly promising. These reports highlight particularly well that the same foundational ingredients are typically repurposed across different argument components.

As with other communicative efforts, it is perhaps most important to view any kind of technical documentation as part of a broader engagement strategy that requires reciprocity, iteration, and refinement over time. We should not be debating critical components of validation arguments in silos across departments, organizations, or stakeholder groups (see Ryan, 2002). Instead, it is essential to co-construct these arguments across teams to clarify issues, sharpen arguments, and refine understandings from a mutual learning perspective. If assessment validation is seen mostly as artifact-based (i.e., producing technical documentation) rather than process-based (i.e., engaging in ongoing communication), then the resulting argumentation will fall short by design.

Time to Leave

It is time to end our quick journey through the conceptual landscape of validation arguments. I hope this journey has left you thinking about a few key markers on our path:

- Validity argumentation is best viewed as a complex ecosystem of interactive communicative efforts that need to be carefully mapped and navigated (terrain)

- Each communicative effort is guided by conventions within discourse communities that take skills, practice, and guidance to navigate (toolkits)

- Different validation arguments re-use, adapt, and re-organize common pieces of information from the R&D process for the program to create appropriately convincing arguments for different discourse communities (journey)

- Engaging diverse, interdisciplinary stakeholder groups in the co-construction of validation arguments deeply enriches the process and resulting products (community)

Even though these markers do not amount to a simple "recipe," they serve as valuable reminders to take a systemic, dynamic view of validity argumentation as a form of interdisciplinary communication. They help us recognize the complexity, roughness, and vastness of the conceptual terrain we need to navigate, and when to ask for support.