Connecting Evaluation Principles to Improvement Science

Developing Processes and Programs to Improve Educational Outcomes

This post addresses the need for evaluation research to complement improvement science research. It is critical to understand whether the changes we make to educational environments are effective in producing the improved educational outcomes we want.

As my colleague Juan D’Brot described in a recent post, a well-designed program evaluation is critical to answering the question, “But How Do We Know It’s Working?” Equally important is having a carefully thought-out and well-designed program to evaluate. Over the past 20 years, educators have relied on third-party evaluators to determine whether a program works, for whom it works, and under what conditions it works.

The Institute of Education Sciences, formed in 2002, played a major role in influencing how large-scale programs are evaluated today and what counts as “strong evidence” of effectiveness. Although rigorous evaluation research (i.e., evaluations using experimental and quasi-experimental methods) tends to be lengthy, tedious, and expensive, it provides an important complement to rapid-cycle improvement science research. An experiment will not inform day-to-day classroom instruction the way improvement science can; however, it does provide important policy and program information to drive long-term school improvement.

Begin by Identifying and Defining the Problem to be Solved Using Improvement Science

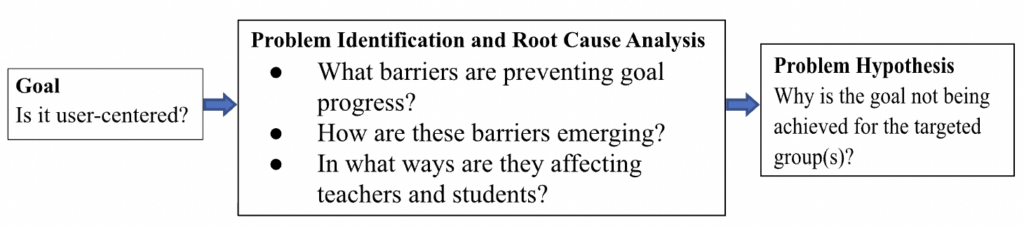

Improvement science depends as much on first identifying the problem to be solved, and then on thoughtfully designing and implementing a program to solve it, as it does on evaluation, if not more. In Learning to Improve, Anthony Bryk and his colleagues argue that the first step to improvement is to specifically identify the problem to be solved. A well-defined problem is important because it focuses stakeholders on identifying root causes, i.e., the structures, processes, and behaviors that produce and sustain the problem (Bryk et al., 2015). Addressing a problem at its core therefore starts with identifying its root causes. Neglecting root causes explains why so many research-based solutions fail to address systemic problems at scale: school contexts don’t share the same roots; they are too diverse, unique, dynamic, and elusive for any one solution to fit them all.

The Improvement Framework

Once a problem is well-defined and its root causes well understood, the improvement process essentially boils down to three core questions (Langley et al., 2009):

- What is our goal? What are we trying to accomplish?

- What change might we introduce and why?

- How will we know that a change is actually an improvement?

These three questions establish the starting point for any education agency attempting to design and improve a program.

The first question identifies the target. The second addresses how a program or initiative should work in theory. The third prompts stakeholders to think carefully about the evidence needed to determine whether the program is working as designed. Together, these questions provide a helpful organizing framework for systematically evaluating proposed solutions and informing improvement.

Applying the Improvement Framework to Improve Student Learning

What is our goal?

Research suggests that any program seeking to improve student learning must address the instructional core: the relationship between teacher and students in the presence of content.

The only way to improve student learning at scale is to (1) raise the level of the content that students are taught; (2) increase the skill and knowledge that teachers bring to the teaching of the content; and (3) increase the level of students’ active learning of the content (City et al., 2003, chapter 1).

Goals that fail to prioritize any of these three ends are destined to fall short when success is defined as improving student learning at scale. Thus, having a user-centered goal (i.e., one that focuses on what is happening with students and/or in the classroom) is a necessary element of improvement.

What are we trying to accomplish?

Additionally, the goal should be specific, directing stakeholders to identify the problems and associated root causes that prevent improvements from taking hold.

For example, suppose a district’s goal is that all students read at grade level by the end of grade 3. The resources and underlying mechanisms that support such a goal would include high-quality curriculum resources, coordinated screening procedures, diagnostic testing, and multi-tiered support strategies, among other elements.

In this case, the goal directs stakeholders to carefully examine where along the preK-grade 3 pipeline critical resources and supports are not reaching classrooms or students. Policies and procedures may then be reviewed to identify what barriers are introduced along the pipeline and how those barriers prevent progress for specific groups of students. Only then can stakeholders begin to generate evidence-based hypotheses about why the goal is not being achieved.

What Change Might We Introduce and Why?

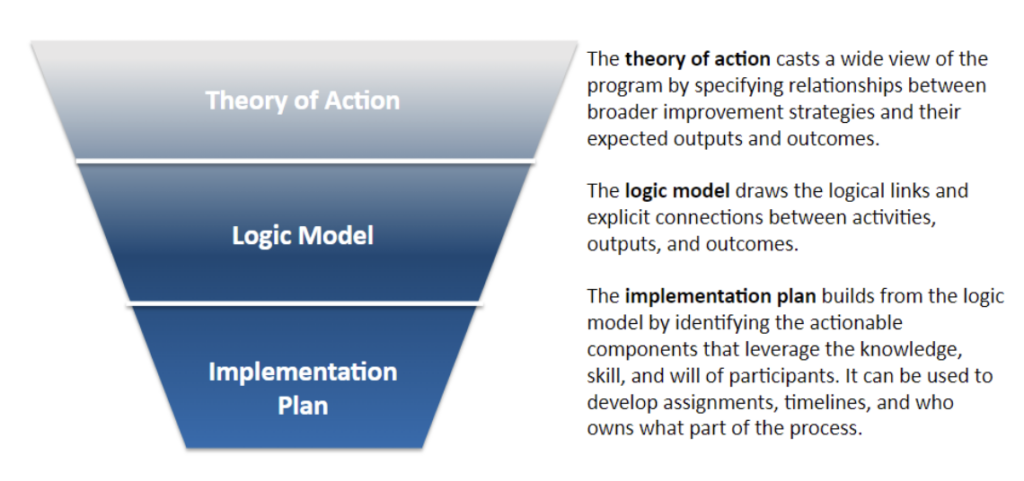

Answering this question guides the development of a well-specified program or solution for improvement, which involves three steps:

- Develop a theory of action, which addresses identified problems and root causes to achieve the desired goal. A theory of action may be stated as a hypothesis in “if-then” form: If we do X, then Y should happen, which, in turn, should produce Z.

- Build the program logic. A logic model operationalizes the theory of action. It specifies the resources, activities, outputs, and outcomes that make up a particular hypothesis, forming a causal chain that links observable activities to their requisite outputs, which, in turn, should influence a specific set of outcomes. Logic models are important resources for evaluators because this added detail allows the evaluator to attach specific measurement tools to each component, examine the implementation process, and investigate for whom, how, and under what conditions a program works as designed.

- Develop the program’s implementation plan. The implementation plan specifies tasks and timelines and assigns ownership of distinct elements of the implementation process.

Figure 1 provides a visual depiction of the three-step process.
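Because a logic model is essentially a chain of components in which each one is expected to influence the next, it can be sketched as a small directed graph. The Python below is a minimal, hypothetical illustration: the `Component` class, the `causal_paths` helper, and the component names (loosely adapted from the formative assessment example later in this post) are all assumptions made for illustration, and the sketch assumes the model contains no loops.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """One box in a logic model: a resource, activity, output, or outcome."""
    name: str
    kind: str  # "resource", "activity", "output", or "outcome"
    leads_to: list = field(default_factory=list)  # names of downstream components


def causal_paths(model, start):
    """Return every path from `start` to a component with nothing downstream.

    Assumes the model is acyclic; a loop would recurse forever.
    """
    node = model[start]
    if not node.leads_to:
        return [[start]]
    paths = []
    for nxt in node.leads_to:
        for tail in causal_paths(model, nxt):
            paths.append([start] + tail)
    return paths


# Hypothetical components, loosely based on a formative assessment program.
model = {
    "PD sessions": Component("PD sessions", "activity", ["lesson and task designs"]),
    "lesson and task designs": Component("lesson and task designs", "output", ["classroom enactment"]),
    "classroom enactment": Component("classroom enactment", "output", ["student learning"]),
    "student learning": Component("student learning", "outcome"),
}

for path in causal_paths(model, "PD sessions"):
    print(" -> ".join(path))
```

Each printed path is one hypothesis that can be checked link by link: if an upstream component is missing or weak, there is little reason to expect the downstream outcome to move.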

How will we know that a change is actually an improvement?

The next step is to establish a plan to evaluate whether our program is actually leading to the desired improvement, which requires us to connect measures to the key activities, outputs, and outcomes in the logic model. With a well-specified logic model, measures may now be identified and used to determine whether a proposed solution is producing the expected changes along a causal chain of events. Although outcome measures often already exist, measures that examine implementation often need to be developed, piloted, and refined over time.
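One way to operationalize this step is to keep the logic model and the measurement plan side by side and check that every component has at least one measure attached. The sketch below is hypothetical: the headings mirror the resources, activities, outputs, and outcomes structure described above, but the component names, the measures, and the `unmeasured` helper are illustrative assumptions.

```python
# Hypothetical logic-model components, grouped by heading.
logic_model = {
    "Resources": ["curriculum materials", "PD facilitators"],
    "Activities": ["PD attendance", "task design"],
    "Outputs": ["formative tasks enacted"],
    "Outcomes": ["unit test scores"],
}

# Hypothetical measures identified so far, keyed by component.
measures = {
    "PD attendance": "sign-in sheets",
    "task design": "lesson-plan rubric score",
    "unit test scores": "end-of-unit assessment",
}


def unmeasured(logic_model, measures):
    """List (heading, component) pairs that do not yet have a measure."""
    return [
        (heading, component)
        for heading, components in logic_model.items()
        for component in components
        if component not in measures
    ]


for heading, component in unmeasured(logic_model, measures):
    print(f"{heading}: no measure yet for '{component}'")
```

In this made-up example the gaps fall on resources and implementation outputs, which matches the observation above that implementation measures are often the ones still to be developed.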

Illustrating the Process with an Example of Accelerating Learning

Problem: We now have evidence from states and assessment vendors suggesting that the COVID-19 pandemic contributed to unfinished learning and exacerbated achievement gaps among some of our most vulnerable student populations. As a result, many districts are laser-focused on accelerating student learning to address unfinished learning and widening achievement gaps.

Goal: Accelerating student learning is a complex and nebulous problem. However, we do have strong evidence that formative assessment is one of the best-known ways of accelerating learning when implemented with fidelity. Therefore, many state and local agencies are actively working toward the goal of improving teachers’ use of research-based formative assessment practices. By doing so, educators hope to accelerate student learning, particularly for underachieving students and under-served student populations.

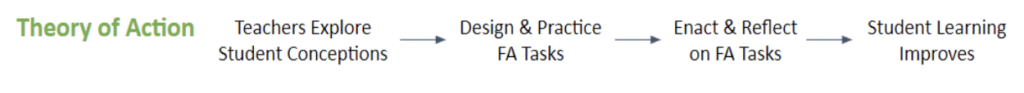

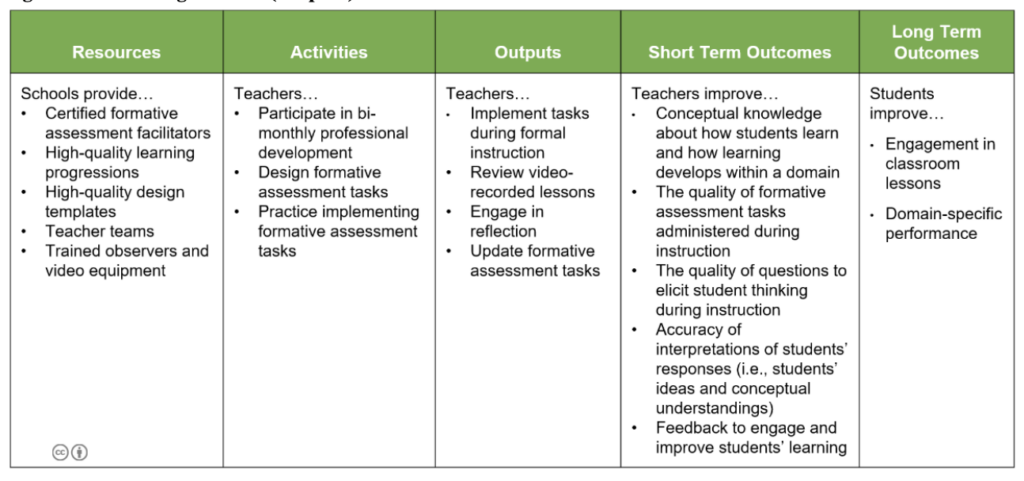

Theory of action: A few years ago, Erin Marie Furtak and her colleagues at the University of Colorado tested a formative assessment program that was designed to accelerate and deepen students’ understanding of a science unit on natural selection. The program is called the Formative Assessment Design Cycle (FADC) (see Figure 2).

The program’s theory of action states:

- IF teachers review assessments and student work,

- AND carefully examine students’ misconceptions against pre-established learning progressions,

- AND design project-based tasks and activities to elicit students’ thinking (aloud and in writing),

- AND reflect on these tasks and activities by reviewing student work and recorded lessons of students’ conversations,

- THEN, teachers will develop content expertise and the ability to identify and clarify common student misconceptions “on the fly,” pushing students’ thinking forward,

- IN TURN, students will be more engaged and better prepared to think deeply and accommodate new information,

- RESULTING IN deeper learning and higher student achievement.

Logic model: The logic model presented in Figure 3 breaks the theory of action into a series of components along a causal chain. It identifies key resources, activities, outputs, and outcomes required to empirically test the theory of action. Teachers explore student conceptions through a year-long series of bi-monthly professional development (PD) sessions. During these PD sessions, teachers design lesson plans and develop formative assessment tasks. Teachers also spend time practicing these formative assessment tasks with other teachers before formally implementing them in their classrooms. When they are ready, teachers enact the lessons and formative tasks with students and later reflect on how the lessons went. This cycle of learning, practice, implementation, and reflection is hypothesized to improve student learning.

Implementation plan: This type of program requires a coordinated effort across district and school-level staff. A full implementation plan is beyond the scope of this post; however, you can imagine that pulling this off requires coordinated leadership and project management to gather and deploy resources and to ensure that activities such as teacher professional development are implemented by qualified experts over a predefined timeline. Readers who want more information can read the full article by Furtak et al. (2016).

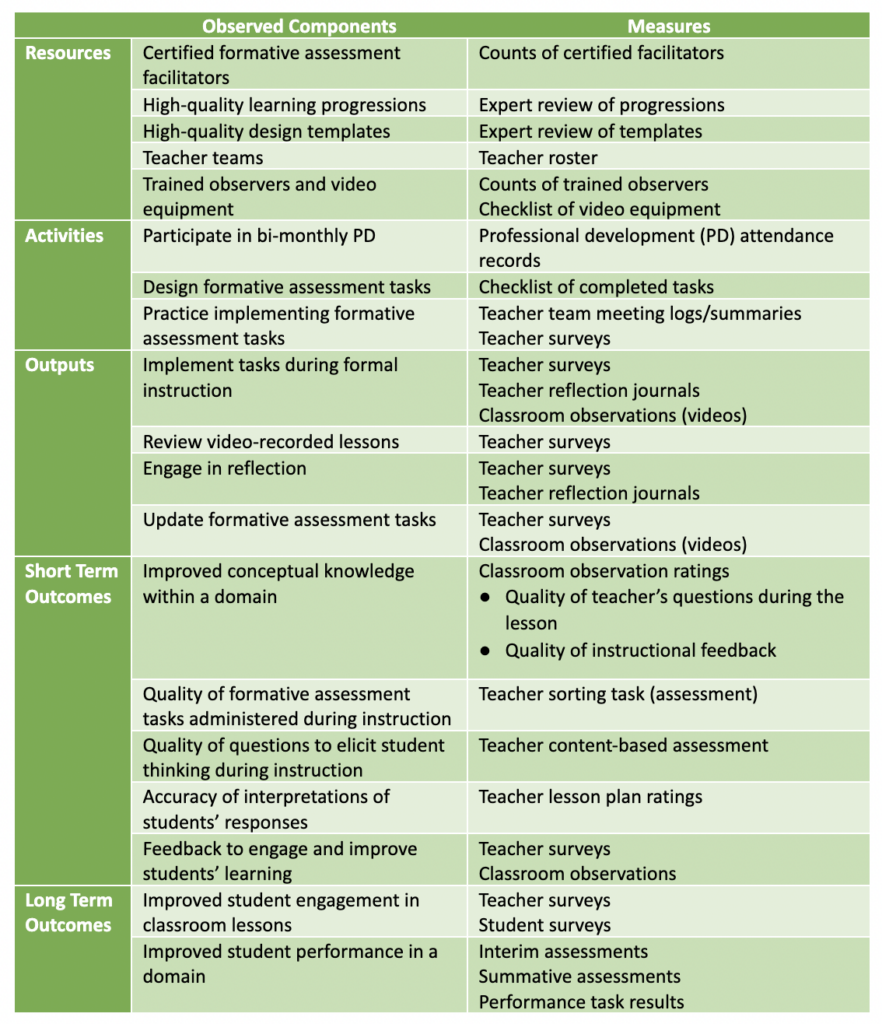

Measurement plan: Table 1 connects measures to each component in the logic model. Column 1 lists each heading from the logic model, column 2 lists the components under that heading, and column 3 lists the measure corresponding to each component. Once the data are collected, school personnel can begin to examine them to understand for whom, how, and under what conditions the program works. Questions they might ask of the results include:

- Do classroom teachers (or schools) have the required resources? Where are resources missing? What specific resources are missing or not meeting minimum specifications?

- Are teachers participating in the training? Is their knowledge and understanding of formative assessment increasing? Is their understanding of the content and how to effectively teach for deeper learning increasing?

- Do teachers who report high levels of implementation and efficacy also receive correspondingly high scores on (a) the tasks and activities they design (e.g., lesson plans) and (b) third-party ratings of their classroom practices?

- What is the relationship between teachers’ implementation levels and students’ achievement levels on the unit test?

While questions that examine relationships along the causal chain may require a data expert (e.g., correlating teachers’ PD attendance with the quality of their task designs), many other essential questions about implementation can be addressed through simple descriptive analysis in Excel or Google Forms (e.g., What percentage of teachers regularly attend PD? Are they implementing the designed tasks? Are they applying formative practices during lessons?). Answering these types of implementation questions will go a long way toward improving practice and deepening students’ learning. The key is applying a thoughtful, thorough, and systematic process like the one described above.
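As an example of the simple descriptive analysis described above, the percentage of teachers who regularly attend PD takes only a few lines. The sketch below uses made-up attendance counts; the number of sessions, the 75 percent cutoff for "regular" attendance, and the `pct_regular` helper are assumptions that a district would replace with its own definitions.

```python
# Hypothetical attendance counts: PD sessions attended by each teacher.
attended = {"T1": 18, "T2": 9, "T3": 15, "T4": 20, "T5": 12}
TOTAL_SESSIONS = 20        # assumed number of sessions offered
REGULAR_THRESHOLD = 0.75   # assumed cutoff: "regular" means at least 75% of sessions


def pct_regular(attended, total_sessions, threshold):
    """Percentage of teachers whose attendance rate meets the threshold."""
    regular = [t for t, n in attended.items() if n / total_sessions >= threshold]
    return 100 * len(regular) / len(attended)


share = pct_regular(attended, TOTAL_SESSIONS, REGULAR_THRESHOLD)
print(f"{share:.0f}% of teachers attend PD regularly")  # 60% with this made-up data
```

In a spreadsheet, the same figure could come from a formula such as =COUNTIF(B2:B6,">=15")/COUNTA(B2:B6), assuming the attendance counts sit in cells B2 to B6 and 15 sessions is the cutoff.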

Conclusion

Evaluation plays a critical role in learning and improving; it is perhaps the best, and possibly the only, way that we as educators can break the cycle of reinventing and renaming solutions to complex problems. The problem of improvement is not about finding a silver-bullet solution; it is about understanding how to flexibly learn and adjust solutions to address specific problems as they exist within a particular classroom or school. When robust evaluation methods are applied in an improvement paradigm, information can be acted upon at different levels of the system and used to address even our most complex problems of practice.